Summary

Picos are a natural way to build microservices. This post presents the results of an experiment we ran to see how the new Pico Engine performs when placed under surge loading that simulates BYU's priority registration.

Every semester over 30,000 BYU students register for two to six sections out of about 6,000 sections being offered. During the priority registration period this results in a surge as students try to register for the classes they need.

One proposed solution for handling the surge is to create a microservice representing sections that can spawn copies of itself as needed. Thinking reactively, I concluded that we might want to just have an independent actor to represent each section. Actors are easy to create and the underlying system handles the scaling for you.

Coincidentally, I happen to have an actor-style programming system. Picos are an actor-model programming system that supports reactive programming. As such they are great for building microservices.

Bruce Conrad and Matthew Wright are reimplementing the KRE pico platform using Node.js. We call the new system the "pico engine." I suggested to Bruce that this class registration problem would be a fun way to test the capabilities of the new pico engine. What follows are the results of Bruce's experiment.

Experimental Setup

We were able to get the add and drop requests for the first two days of priority registration for Fall 2016. This data included 6,675 students making 44,504 registration requests against 5,393 sections.

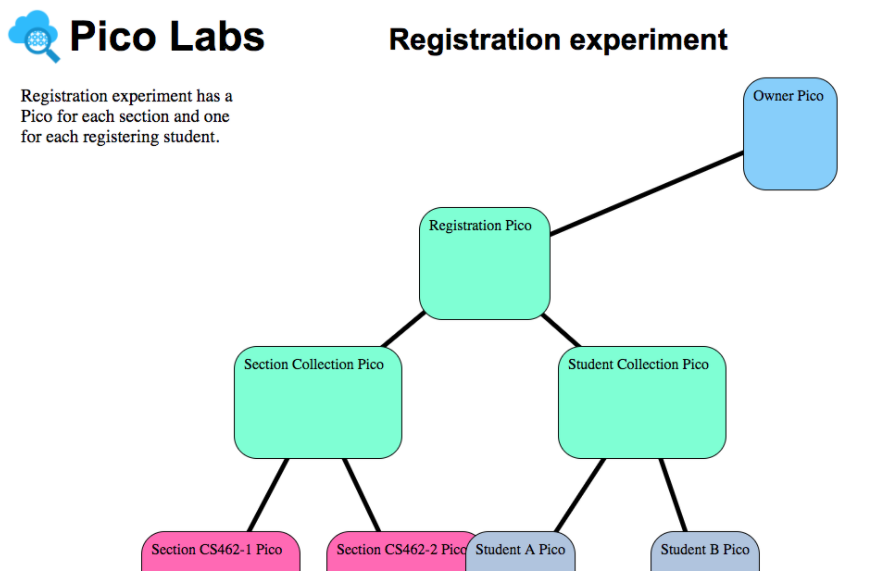

The root actor in a pico system is called the Owner Pico. The Owner Pico is the ancestor of all other picos. For this experiment, Bruce created two child picos for the Owner Pico to represent the collection of sections and the collection of students. The Section Collection Pico is the parent of a pico for each section. The Student Collection Pico is the parent of a pico for each student. The resulting pico system looked like the following diagram from the Pico Engine's developer console:

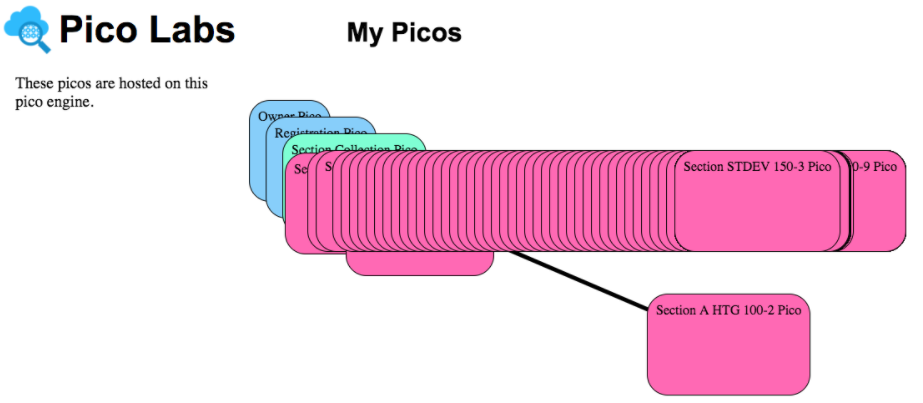

Bruce chose not to create picos for each student for this experiment. He programmatically created picos for each section. The following figure shows the resulting pico graph:

Each section pico has a unique identity and knows things like its name, section id, and seating capacity. The pico also keeps a roster of students who have registered for the course. Each pico initialized itself by calling a microservice that supplied this data.

Using this configuration, Bruce replayed the add/drop requests as events to the pico engine. Events were replayed by sending a section:add or section:drop event to the Section Collection Pico (which routed requests to the individual section picos).
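As a rough illustration of this routing pattern, here is a minimal Python sketch. It is not KRL and not the pico engine's API. The class names, the `handle` and `route` methods, the demo section id, and the rule that a full section rejects an add are all assumptions made for the example. What it shows is the shape of the design: a collection object forwards each section:add or section:drop event to the one section object that owns the roster.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    """Hypothetical stand-in for a section pico: owns only its own state."""
    section_id: str
    capacity: int
    roster: set = field(default_factory=set)

    def handle(self, event_type: str, student_id: str) -> bool:
        """Apply one add or drop; return True if the roster changed."""
        if event_type == "add":
            # Assumption: duplicate adds and adds to a full section are rejected.
            if student_id in self.roster or len(self.roster) >= self.capacity:
                return False
            self.roster.add(student_id)
            return True
        if event_type == "drop":
            if student_id not in self.roster:
                return False
            self.roster.remove(student_id)
            return True
        raise ValueError(f"unknown event type: {event_type}")


class SectionCollection:
    """Hypothetical stand-in for the Section Collection Pico: routes only."""

    def __init__(self):
        self.sections = {}

    def create_section(self, section_id: str, capacity: int) -> None:
        self.sections[section_id] = Section(section_id, capacity)

    def route(self, domain: str, event_type: str,
              section_id: str, student_id: str) -> bool:
        if domain != "section":
            raise ValueError(f"unexpected event domain: {domain}")
        return self.sections[section_id].handle(event_type, student_id)


# Made-up demo data, not taken from the registration data set.
collection = SectionCollection()
collection.create_section("DEMO 101-1", capacity=2)
collection.route("section", "add", "DEMO 101-1", "student-1")
collection.route("section", "add", "DEMO 101-1", "student-2")
third_add = collection.route("section", "add", "DEMO 101-1", "student-3")
collection.route("section", "drop", "DEMO 101-1", "student-1")
```

Because each section holds its own roster, no two sections ever contend for shared state, which is the property that makes the one-actor-per-section design attractive.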

After the requests have been replayed, we can query individual section picos to ensure that the results are as expected. This figure shows the JSON result of querying the section pico for STDEV 150-3. Queries were done programmatically to verify all results.

Results

Bruce conducted two tests using a pico engine running on a MacBook Pro (2.6 GHz Intel Core i5).

The first replay, done with per-event timing enabled, required just under an hour for the 44,504 requests. The average time per request was about 80 milliseconds (of which approximately 30 milliseconds was overhead relating to measuring the time).

A second replay, done with the per-event timing disabled, processed the same 44,504 add/drops in 35 minutes and 19 seconds. The throughput was 21 registration events per second or 47.6 milliseconds per request.
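The figures for both replays can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers stated above; the variable names are mine.

```python
requests = 44_504

# Second replay: 35 minutes 19 seconds of wall-clock time, timing disabled.
elapsed_s = 35 * 60 + 19                      # 2,119 seconds
events_per_second = requests / elapsed_s
ms_per_request = 1000 * elapsed_s / requests

# First replay: about 80 ms per request with per-event timing enabled.
first_replay_minutes = requests * 0.080 / 60

print(f"{events_per_second:.1f} events/s")    # 21.0 events/s
print(f"{ms_per_request:.1f} ms/request")     # 47.6 ms/request
print(f"{first_replay_minutes:.1f} minutes")  # 59.3 minutes
```

The last line agrees with the "just under an hour" reported for the first replay.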

For reference, the legacy system, running on several large servers, sustains a peak rate of 80 requests per second, while averaging one request about every 3.9 seconds.

These tests show that a single pico engine running on a laptop can process tens of thousands of events at a sustained rate of about 21 events per second.

Conclusions and Going Further

Based on the results of this experiment, I believe we're closing in on the performance goal we set for the pico engine rewrite. I believe we could achieve better results by running picos across multiple engines. Since every pico is independent, this is easily done and would avoid resource contention by better parallelizing pico operation.
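One simple way to picture spreading independent picos across engines is static partitioning by a stable hash of the pico's identifier. The Python sketch below is only an illustration of that idea, not something the pico engine provides: the `engine_for` function, the engine count of four, and the `section-N` identifiers are hypothetical.

```python
import hashlib
from collections import Counter


def engine_for(pico_id: str, engine_count: int) -> int:
    """Map a pico id to an engine index in a stable, repeatable way."""
    if engine_count < 1:
        raise ValueError("engine_count must be at least 1")
    digest = hashlib.sha256(pico_id.encode("utf-8")).digest()
    # The first 8 bytes of the digest are plenty to spread ids evenly.
    return int.from_bytes(digest[:8], "big") % engine_count


ENGINE_COUNT = 4  # hypothetical number of engines
# Hypothetical ids, one per section in the data set (5,393 sections).
section_ids = [f"section-{n}" for n in range(5393)]

load = Counter(engine_for(s, ENGINE_COUNT) for s in section_ids)
for engine, count in sorted(load.items()):
    print(f"engine {engine}: {count} section picos")
```

Static assignment like this is easy to reason about, but it pins each pico to one engine and reshuffles most picos when the engine count changes.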

The current pico engine does not support dynamic pico assignment, where any free engine in a group can reconstitute and operate a pico as necessary. KRE, the original pico platform, does this to make use of multiple servers for parallelizing requests. The engineering to achieve this is straightforward.

If you're interested in programming with picos, the quickstart will guide you through getting it set up (about a five minute operation) and the lessons will introduce you to reactive programming with picos.

Photo Credit: Maeser Building from Ken Lund (CC BY-SA 2.0)